How MLOps decreases friction and promotes the safe scaling of AI

By Insight Editor / 26 Jun 2020 / Topics: DevOps, Featured, Cloud

A McKinsey survey this year reported a 25% year-on-year increase in the use of AI across standard business processes. These organisations enjoyed an increase in revenues or, more frequently, a reduction in costs, ultimately improving their bottom line. While these figures are encouraging, the survey also revealed another insight: the gap between the high performers and the rest of the pack is widening. Supporting this insight, the HBR article “A Radical Solution to Scale AI Technology” surveyed over 1,500 C-suite executives across 16 industries to understand their need to scale AI across the enterprise. While 84% knew they needed to scale AI to meet growth objectives, only 16% had moved past experimenting with AI. Of this 16%, only a portion were able both to jump the hurdle from Proof-of-Concept to Production and to scale their initiatives.

Why weren't all organisations able to make the jump? Take your pick: misalignment between the business and AI strategies, teams not structured to foster AI ways-of-working, not enough training in Data Analysis and AI, or even the absence of change management could all be reasons. Another piece of the puzzle that is often missing for organisations wanting to scale AI is the use of the tools and methodologies of 'Machine Learning and Operations', better known as MLOps.

What is MLOps?

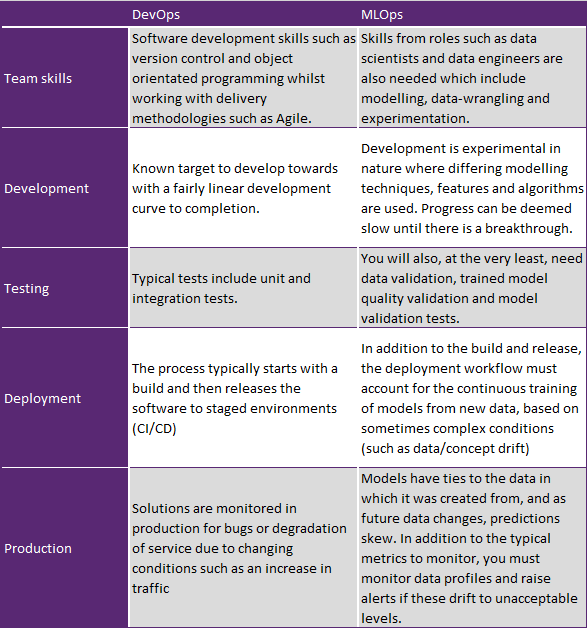

MLOps takes the well-known approaches of DevOps and applies them to a line of work that is experimental and complex, so that enterprises can leverage data science in a highly compliant and regulated environment. Take the finance sector as an example. Through machine learning, lenders are determining credit scores for customers by mining third-party datasets and delivering these insights back to stakeholders in a matter of seconds. While this would not surprise many, what happens if someone asks how they landed on a particular credit score? For this approach to be compliant, banks must be able to explain and trace a model's behaviour in production. At the very least, this means being able to reproduce experiments and artefacts (such as models), version the models, handle bias, create lineage (tracing models right back to the data used for training) and monitor how models are performing in production. All this, while managing potentially thousands of models. MLOps helps organisations achieve this level of scale within a highly sensitive and governed environment.
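To make versioning and lineage more concrete, here is a minimal sketch of a record that ties a model version back to the exact data and code used to train it. It is an illustration only, not how any particular platform works: the names `ModelRecord`, `register` and `fingerprint`, the in-memory list used as a registry, and the sample values (`credit-score`, `abc123`) are all hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


def fingerprint(data: bytes) -> str:
    """Return a SHA-256 hex digest identifying an exact byte sequence."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class ModelRecord:
    """Hypothetical lineage entry linking a model version to its inputs."""
    model_name: str
    version: int
    training_data_sha256: str
    code_revision: str
    hyperparameters: str  # stored as a JSON string so it is easy to compare


def register(registry: list, model_name: str, training_data: bytes,
             code_revision: str, hyperparameters: dict) -> ModelRecord:
    """Append a new record; the version is one more than the existing count."""
    previous = [r for r in registry if r.model_name == model_name]
    record = ModelRecord(
        model_name=model_name,
        version=len(previous) + 1,
        training_data_sha256=fingerprint(training_data),
        code_revision=code_revision,
        hyperparameters=json.dumps(hyperparameters, sort_keys=True),
    )
    registry.append(record)
    return record


# Illustrative data only: two training runs on different snapshots of a dataset.
registry = []
v1 = register(registry, "credit-score", b"id,income\n1,50000\n",
              "abc123", {"max_depth": 4})
v2 = register(registry, "credit-score", b"id,income\n1,50000\n2,72000\n",
              "abc123", {"max_depth": 4})
print(json.dumps(asdict(v2), indent=2))
```

Because the data fingerprint changes whenever the training data changes, a question such as "which data produced the model that scored this customer?" can be answered by looking up the record for the version that was in production at the time.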

Example MLOps platforms:

- Uber’s Michelangelo: https://eng.uber.com/michelangelo-machine-learning-platform/

- Facebook’s FBLearner: https://engineering.fb.com/core-data/introducing-fblearner-flow-facebook-s-ai-backbone/

Why is it hard?

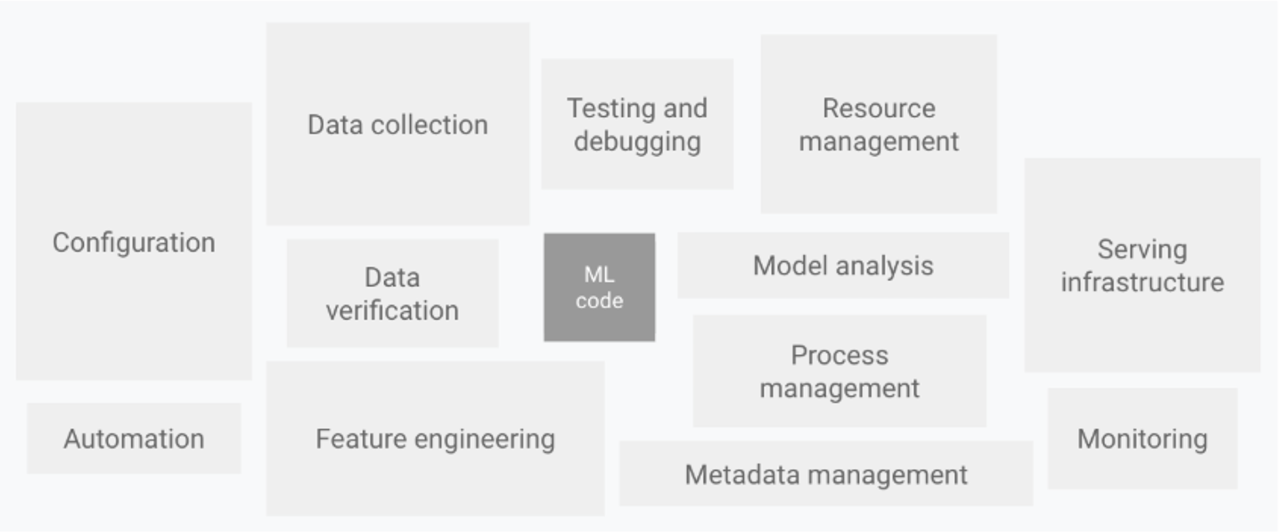

Google’s visual description of ‘The Machine Learning System’ is a great way to appreciate the challenges in building an MLOps architecture.

Figure 1 - Google - The Machine Learning System

Organisations that are starting their AI journey may be solely focussed on the machine learning itself. In the real world, this may look like a Data Scientist working on their own laptop, with their own tools, while using Notepad or MS Word to document how their experimentation is going. In a Production scenario, not only will this not suffice, there are now the added challenges of serving infrastructure, monitoring the models, re-training the models and process management, to name a few. How do you scale your AI initiatives while taking all these extra concerns into account?

Skill-sets also play a part in the difficulty of scaling. Whilst Data Scientists come from an experimental background, they typically lack the practices of a software engineer, which include version control, collaboration and DevOps. Conversely, Software Engineers lack the modelling and analytical skills of a Data Scientist. The outcome of this skill-set mismatch is that getting a data science model from Proof-of-Concept into Production turns into a multi-business-unit affair. This means multiple hand-offs and meetings, creating delays and loss of context between teams – how would a software engineer know how to handle a deployment issue that is related to the model itself?

Figure 2 - DevOps vs MLOps (based on Google's 5 dimensions)

What does MLOps look like?

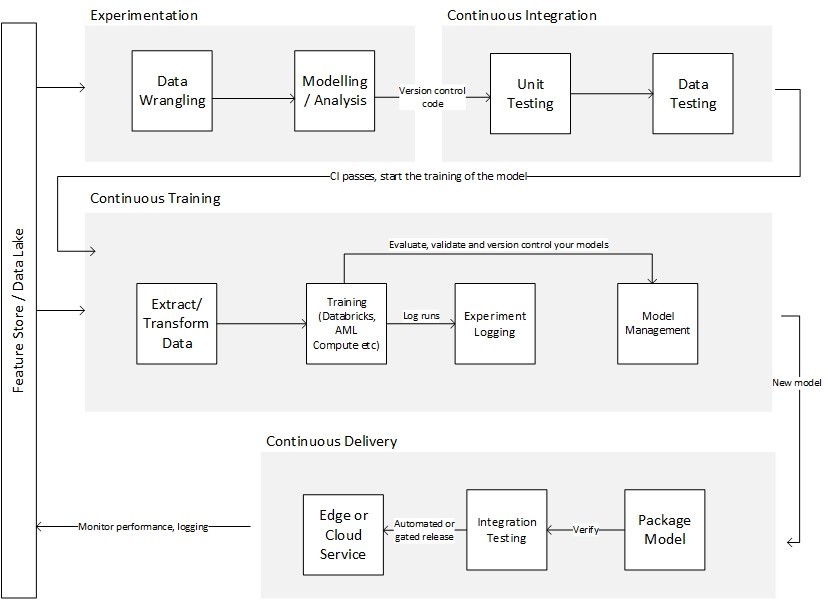

Taking a step lower into the detail, an MLOps workflow may look like the one below. Both Microsoft and Google have their own representations of this life-cycle.

Figure 3 - Sample MLOps workflow

The highlight features of an MLOps platform are:

Experimentation. As Machine Learning Engineers experiment over data, their progress is automatically logged (for example code artefacts and training accuracy metrics) and can be compared to other runs.

Continuous Integration. As code is checked in to a code repository, it is run against various unit tests, data tests and integration tests.

Continuous Training. As new data or code artefacts are generated, the training workflow is automatically triggered and the resulting model is run through various tests to understand its performance against current models.

Continuous Deployment. As models pass various validity tests, they can be automatically or semi-automatically promoted through test and production environments. Models can also be rolled out through deployment workflows such as Blue-Green Deployment and Canary Deployment.

Monitoring. Models in production must be continuously monitored for auditing requirements and for machine learning specific requirements such as drift (a shift in the input data, or in the relationship between inputs and outcomes, that causes predictions to skew). Feedback loops must remain tight, keeping true to the DevOps principles.
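Two of the features above, the gate that decides whether a retrained model should replace the current one and the monitoring of input data in production, can be sketched in a few lines of plain Python. This is a rough illustration under stated assumptions rather than a reference implementation: the function names, the `min_gain` margin, the equal-width binning and the 0.25 alert threshold (a commonly quoted rule of thumb for the Population Stability Index) are all choices made for this example.

```python
import math


def should_promote(candidate_accuracy: float, current_accuracy: float,
                   min_gain: float = 0.01) -> bool:
    """Promote only if the candidate beats the current model by min_gain."""
    return candidate_accuracy - current_accuracy >= min_gain


def bin_proportions(values, edges):
    """Share of values in each bin; out-of-range values go to the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        for i in range(len(edges) - 1):
            if v >= edges[i]:
                idx = i
        counts[idx] += 1
    return [c / len(values) for c in counts]


def population_stability_index(baseline, live, bins=5, eps=1e-6):
    """PSI between a training-time feature sample and a production sample.

    Assumes the baseline contains at least two distinct values.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins
    edges = [lo + i * width for i in range(bins + 1)]
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(live, edges)
    psi = 0.0
    for e, a in zip(expected, actual):
        # eps avoids log(0) and division by zero for empty bins.
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Illustrative values only.
baseline = list(range(100))            # feature values seen during training
shifted = [v + 50 for v in baseline]   # the same feature after a shift

print(should_promote(candidate_accuracy=0.93, current_accuracy=0.90))
print(population_stability_index(baseline, baseline))
print(population_stability_index(baseline, shifted) > 0.25)
```

In a real pipeline the promotion gate would typically compare several metrics on a held-out dataset, and a drift score above the chosen threshold would raise an alert or trigger the continuous training workflow.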

Where to from here?

Aligning to a Machine Learning platform will give you the greatest opportunity for success. Support for MLOps has been growing rapidly, with services such as Azure Machine Learning Services (Microsoft) and MLflow (Databricks) paving the way. Many of our customers have been mixing these services based on their alignment to open-source or cloud vendors. The key here is to look for services that are interoperable with one another and offer deployment approaches, such as containers, that can be deployed both in the cloud and on the (intelligent) edge.

At Insight, we’ve had great success in leveraging Azure Machine Learning Services, MLflow, Azure DevOps and Microsoft Teams to create fully automated MLOps workflows that enable organisations to accelerate AI. If you’d like to know more, please reach out!

References

https://hbr.org/2020/03/how-high-performing-companies-develop-and-scale-ai

https://azure.microsoft.com/en-us/services/machine-learning/mlops/
