Tech Journal MLOps: The Key to Unlocking AI Operationalisation

By Amol Ajgaonkar / 29 Jul 2021 / Topics: Artificial Intelligence (AI), DevOps, Digital transformation

Realising the true value of Artificial Intelligence (AI) takes much more than simply building a model. So, how can modern organisations get from pilot to production?

Today, two-thirds of executives cite AI as vital to the future of their business, with plans to increase investments this year. As a result, IDC reports the global AI market is forecast to grow 16.4% year over year, reaching a value of $327.5 billion in 2021 alone.

The reasons for this are clear. AI has moved beyond the realms of experimentation to provide demonstrable value for today’s organisations, resulting in significant top- and bottom-line improvements. When even 1% improvements are multiplied through more repeatable and scalable AI solutions, greater process optimisation, cost savings and higher revenue follow.

But set against the potential benefits of AI is the harsh reality that, according to Gartner, just 53% of AI Proofs of Concept (PoCs) are ever scaled to production. Fewer still manage to deliver the intended, measurable business value.

So, why the disconnect?

The last-mile challenge

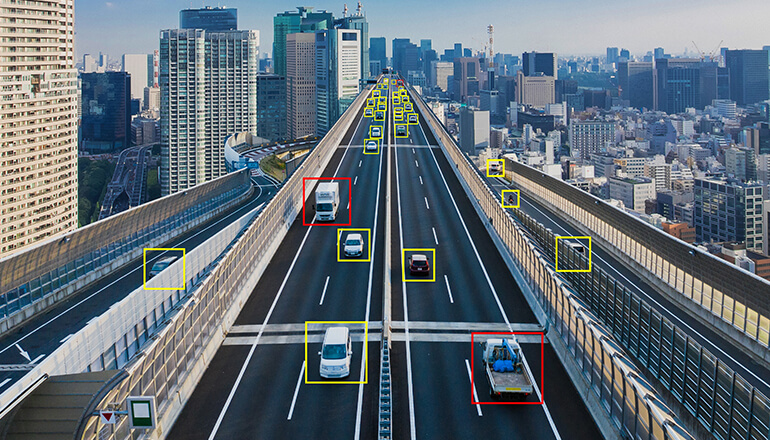

Given the proper data and compute resources, most data scientists have the skills to design an AI model fairly easily. But when it comes to deploying and managing AI in production, organisations often underestimate the difficulties of handing off a project to IT.

IT teams may not fully understand the complexity of implementing AI. If they do manage to deploy a model as a microservice, nanoservice or a piece of software, they’re often unprepared to support the unique deployment patterns associated with monitoring, back-testing, retraining, modifying or updating that model. A lack of upfront collaboration and high-level ownership throughout this process can extend timelines further and build internal frustration.

As a result, many projects fail to make it from PoC to production.

In data science, this phenomenon is known as the “last-mile challenge” — although it typically occurs just two-thirds of the way through the AI lifecycle. In fact, it’s often only the first major hurdle to fully operationalising a solution.

So, what sets today’s AI leaders apart from those organisations still struggling to get a pilot off the ground?

Navigating the AI lifecycle

The key to overcoming the “last-mile challenge” lies in the strategic management of the AI journey.

McKinsey reports AI adopters with a proactive strategy achieve significantly higher profit margins — between 3% and 15% above the industry average. Rather than addressing operationalisation as an afterthought, organisations that successfully transform through AI are those that plan holistically beyond just the data science.

The Cross-Industry Standard Process for Data Mining (CRISP-DM) provides a model for conceptualising the ongoing AI lifecycle.

This cycle begins with the identification of measurable business value, as well as planning for how the solution will be maintained over time. With the end goal in mind, data scientists evaluate, collect and prepare data for training. Once a Machine Learning (ML) model has been trained and tested, they take the time to ensure it meets business acceptance criteria before deploying and monitoring in production.
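
As a minimal sketch of the acceptance check described above, the Python below compares a candidate model's evaluation metrics against business thresholds before release. The `AcceptanceCriterion` class, the metric names and the threshold values are all hypothetical, chosen purely for illustration; they are not part of CRISP-DM or of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceCriterion:
    """One business acceptance rule (hypothetical structure for illustration)."""
    metric: str
    threshold: float
    higher_is_better: bool = True

    def passes(self, value: float) -> bool:
        # Metrics such as recall must meet or exceed the threshold;
        # metrics such as latency must stay at or below it.
        if self.higher_is_better:
            return value >= self.threshold
        return value <= self.threshold


def failed_criteria(metrics, criteria):
    """Return the names of metrics that are missing or miss their threshold."""
    failures = []
    for criterion in criteria:
        value = metrics.get(criterion.metric)
        if value is None or not criterion.passes(value):
            failures.append(criterion.metric)
    return failures


def ready_to_deploy(metrics, criteria):
    """A model is releasable only when every criterion is satisfied."""
    return not failed_criteria(metrics, criteria)


# Illustrative thresholds only; real values come from the business.
CRITERIA = [
    AcceptanceCriterion("recall", 0.90),
    AcceptanceCriterion("latency_ms", 200.0, higher_is_better=False),
]

candidate = {"recall": 0.93, "latency_ms": 240.0}
print(failed_criteria(candidate, CRITERIA))
print(ready_to_deploy(candidate, CRITERIA))
```

Here the candidate clears the recall threshold but exceeds the latency limit, so the check reports `latency_ms` and blocks the release. Agreeing thresholds like these at the start of the cycle gives data scientists and IT a shared, testable definition of "ready for production".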

Post deployment, AI is integrated back into business systems and processes in a way that successfully transforms the organisation. The cycle resumes with new understandings of the business in light of the transformation, identifying the need for new applications, new software and new AI to be developed in support of that evolution.

Successfully enabling this continuous lifecycle and ensuring the delivery of business value requires an effective strategy for AI operationalisation. This is where MLOps comes into play.

What is MLOps?

MLOps, or machine learning operations, refers to the process and tooling of consistently developing, deploying and maintaining reliable, responsible AI. By applying the broad concepts and principles of DevOps to machine learning, MLOps helps organisations understand, manage and scale the holistic data lifecycle through repeatable processes. Like DevOps, MLOps advocates for an emphasis on automation, collaboration and continuous feedback.

What’s the difference between MLOps and AIOps? While MLOps is the application of DevOps to improve the development of AI, AIOps is the application of artificial intelligence to improve IT operations.

When organisations first begin developing AI, processes tend to be highly manual, complex and siloed between teams. This reduces the agility with which new iterations can be developed, retrained and released. Continuous Integration and Continuous Delivery (CI/CD) are rarely taken into consideration, and models are infrequently monitored for performance degradation or behavioural drift.

These challenges ultimately limit the potential value of AI, particularly as the number of models being developed and deployed continues to grow. Without the right approach, teams quickly find themselves mired in break-fix work on custom extract, transform, load (ETL) pipelines, leaving little time for ongoing innovation.

MLOps addresses these issues by providing a framework for orchestrating and automating the ML pipeline:

- Continuous training enables rapid development and experimentation with new models.

- Continuous integration is supported by automated testing and modularised pipeline components.

- Improved collaboration and alignment between the development and production environments simplify handoffs, supporting continuous delivery.

- Automated triggering accelerates the deployment of newly trained models.

- Ongoing monitoring provides feedback on performance based on live data. This information can then be used to optimise and retrain models over time.
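
The monitoring and automated triggering steps above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a production design: the function names, the accuracy floor and the drift limit are hypothetical, and the drift measure (how far the live mean of one input feature has moved from its training baseline, in baseline standard deviations) stands in for the more robust statistical tests a real pipeline would likely use.

```python
from statistics import mean, stdev


def mean_shift(baseline, live):
    """Shift of the live mean from the baseline mean, in baseline standard deviations."""
    spread = stdev(baseline)
    if spread == 0:
        # A constant baseline: any change in the mean counts as unbounded drift.
        return 0.0 if mean(live) == mean(baseline) else float("inf")
    return abs(mean(live) - mean(baseline)) / spread


def retraining_reasons(baseline, live, live_accuracy,
                       min_accuracy=0.85, max_shift=2.0):
    """Return the reasons (if any) that a retraining run should be triggered.

    min_accuracy and max_shift are illustrative defaults, not recommendations.
    """
    reasons = []
    if live_accuracy < min_accuracy:
        reasons.append("accuracy below threshold")
    if mean_shift(baseline, live) > max_shift:
        reasons.append("input drift")
    return reasons


# Hypothetical values for one input feature: training data versus live traffic.
training_values = [10.0, 11.0, 9.0, 10.5, 9.5]
live_values = [13.0, 12.5, 13.5, 12.0]

reasons = retraining_reasons(training_values, live_values, live_accuracy=0.90)
if reasons:
    # In a real pipeline this is where a retraining job would be queued.
    print("Retraining triggered:", ", ".join(reasons))
else:
    print("Model healthy; no action needed")
```

In this example accuracy is still acceptable, but the live inputs have moved well away from the training data, so the check flags input drift before performance visibly degrades. Running a check like this on a schedule is one way to turn monitoring feedback into an automatic trigger rather than a manual review.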

Layered into the broader CRISP-DM lifecycle, MLOps significantly improves the value of AI by providing greater scalability, improved maintenance and reduced risk.

Getting started with MLOps

By 2024, Gartner projects 75% of enterprises will shift from piloting to operationalising AI, driving a 5x increase in streaming data and analytics infrastructures. As the number of AI projects in production continues to rise, so will the need for a mature MLOps approach. The sooner your business identifies and adopts the right tools and processes to fit your needs, the better you’ll be positioned to compete in the next few years.

So how do organisations get started?

For those that haven’t begun deploying AI, discussions around MLOps should be included in the earliest stages of planning. Developing a preemptive strategy for operationalisation will enable your teams to start delivering business value faster. For those actively working to put AI into production, investing the time to optimise with MLOps will help to alleviate the obstacles to continuous integration and delivery.

In either case, the first step is to bring all impacted teams together (including data scientists and engineers, infrastructure and DevOps teams, software developers, business analysts, architects and IT leaders) to begin researching, developing and documenting a comprehensive MLOps strategy.

The goal of this documentation is to guide every process and decision throughout the AI lifecycle. While the specifics will vary based on the organisation, scope and skill set, the strategy should clearly define how an ML model will move from stage to stage, designating responsibility for each task along the way. If there are existing process flows in place, these can be used as a starting point to identify challenges and develop solutions.

The following questions can also help to guide conversations as you build your MLOps strategy:

- What are your goals with AI? What performance metrics will be measured when developing models? What level of performance is acceptable to the business? How will you prioritise explainability, reproducibility and responsibility within your models?

- Do you have the right people? Does your current workforce have the right skill sets to run ML securely, as well as to update and maintain the model and any related edge devices over time? Consider whether you may need to hire additional staff or work with an outside consultant.

- Where will you test and execute your model? How will you create alignment between the development and production environments? How will data ultimately be ingested and stored? Depending on the source and complexity of the use case, there are varying benefits to running AI at the edge or in the cloud.

- Who is responsible for each stage in the lifecycle? Who will build the MLOps pipeline? Who will oversee implementation? Who will be responsible for ongoing performance evaluation and maintenance? How can visibility, collaboration and handoffs be improved across these different roles?

- How will models be monitored over time? How frequently will models be audited? How will models be updated to account for deterioration or anomalous data? How will you gather feedback from users to improve results?

Building your MLOps strategy is also an opportunity to evaluate the need for MLOps tools. Although tooling options are not quite as extensive as those available for DevOps, there are still platforms that can help you manage the machine learning lifecycle. Prioritise your desired features, capabilities and compatibility with your existing data ecosystem to narrow down the best option for your organisation.

An ongoing journey

Once your documentation and toolsets are in place, the conversation around MLOps should continue to evolve over time. As needs change or process gaps are discovered, they must be addressed, documented and integrated back into the MLOps strategy.

Because MLOps requires new ways of thinking and working, all affected groups will need to be trained to understand and embrace the change in roles and processes. Organisational change management can be a valuable, complementary tool to accelerate and improve outcomes with MLOps.

There's no magic bullet to instantly perfect AI operationalisation. But the value of establishing a more repeatable, scalable AI lifecycle will be well worth the effort and resources invested upfront. By equipping your teams to consistently develop, deploy and maintain higher quality AI solutions, MLOps will ultimately help to position your business at the forefront of innovation for years to come.